How to Turn Unstructured Legal Data Into an AI Moat

AmLaw 25 firms have learned an important lesson this year — the most significant AI advantage doesn’t come from the smartest model, but from the data it’s trained on.

LLM wrappers by themselves can dazzle in a demo, but they aren’t designed to leave you with consistent, reusable knowledge assets. In other words, they can’t make your systems smarter. For that, you need structured data: legal knowledge that’s broken down into predictable fields, types and relationships that your systems can actually reason over.

When LLMs, search, and analytics can understand legal data in the same way, portfolio‑level questions and defensible reporting become a reality. This is exactly what Syntracts is built to do — turning decades of contracts into structured, queryable intelligence that compounds value for large firms over time.

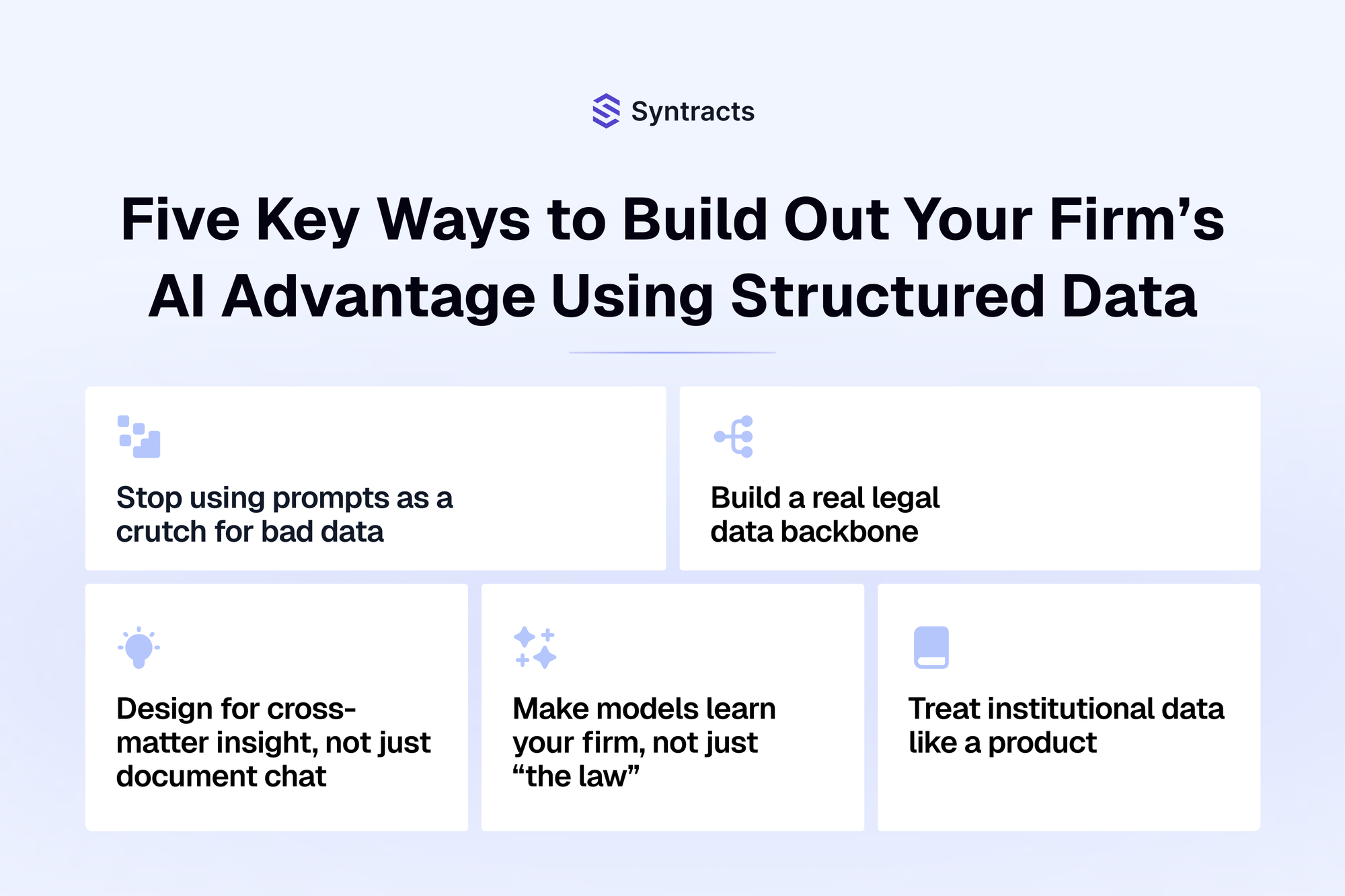

Here are five key ways leading firms are structuring data to build out that advantage.

1. Stop using prompts as a crutch for bad data

Most “AI for lawyers” products are really prompt packs on top of messy data. They handle “What’s in this contract?” but fall apart on “How did this clause trend over the last decade across our tech deals?” because there is no persistent, queryable representation of those clauses.

Don’t try to paper over unstructured information with ever-longer instructions. Leading firms treat prompts as a UX layer on top of real data architecture, such as schemas, taxonomies, and ontologies. This way, every review and drafting assist leaves behind structured, reusable data instead of disappearing into chat history.

2. Build a real legal data backbone

Without a shared infrastructure layer, every AI tool quietly invents its own mini data model. That’s how firms end up with silos, duplicate facts, and inconsistent risk rules.

Serious governance starts with a legal data backbone. This shared layer defines canonical schemas, access controls and lineage across DMS, KM, finance, and matter systems. Once it’s in place, role-based access, provenance tracking, and privacy compliance all become possible. AI tools act as interchangeable front-ends on top of the same trusted core so that swapping vendors doesn’t strand your knowledge or weaken your security posture.
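To make the idea concrete, here is a minimal sketch of what one record in a shared backbone might look like, with lineage and role-based access attached to every fact. The class name, field names, role names, and sample values are all hypothetical and chosen for illustration; they do not describe any particular product's schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClauseFact:
    """One canonical fact, stored once and shared by every tool (hypothetical schema)."""
    matter_id: str
    field: str
    value: str
    source_system: str        # lineage: which system the fact came from
    source_document: str      # lineage: which document supports it
    allowed_roles: frozenset  # role-based access: who may read it


def visible_to(facts, role):
    """Return only the facts the given role is allowed to read."""
    return [f for f in facts if role in f.allowed_roles]


# Illustrative sample data, not real matters.
facts = [
    ClauseFact("M-001", "governing_law", "New York", "DMS",
               "spa_final.docx", frozenset({"deal_team", "km"})),
    ClauseFact("M-001", "fee_arrangement", "fixed", "finance",
               "engagement_letter.pdf", frozenset({"pricing"})),
]

for fact in visible_to(facts, "km"):
    print(fact.field, "from", fact.source_system, fact.source_document)
```

Because access rules and provenance travel with the record rather than living inside a single tool, any front-end that reads from this layer would inherit the same controls.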

3. Design for cross-matter insight, not just document chat

Most tools are great at “chat with this document” and mediocre at “tell me what’s happening across our entire portfolio.” They can’t tell you which indemnity caps you accepted in all tech deals over $100M over the last five years, or how those decisions correlated with disputes.

Don’t settle for AI that only works one document at a time. To answer portfolio-level questions on demand, you need key fields (caps, baskets, survival), normalized clause taxonomies, and consistent metadata (matter type, sector, jurisdiction) applied across matters, not just within a single deal folder. With that structure in place, AI becomes the accelerator for populating and querying it at scale.
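As a rough illustration, once those fields exist as structured records, the indemnity-cap question above becomes a simple filter rather than a document-by-document review. The sketch below assumes a hypothetical record layout and made-up sample matters; real field names and values would come from your own schema.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass(frozen=True)
class IndemnityRecord:
    """Key fields and metadata for one deal's indemnity terms (hypothetical layout)."""
    matter_id: str
    sector: str
    jurisdiction: str
    deal_value_usd: float
    signed_on: date
    cap_pct_of_price: float
    survival_months: int


def caps_for(records, sector, min_value_usd, since):
    """Sorted indemnity caps for deals in a sector, above a value, signed on or after a date."""
    return sorted(
        r.cap_pct_of_price
        for r in records
        if r.sector == sector
        and r.deal_value_usd > min_value_usd
        and r.signed_on >= since
    )


# Illustrative sample data, not real deals.
records = [
    IndemnityRecord("M-001", "tech", "NY", 250e6, date(2023, 5, 1), 10.0, 18),
    IndemnityRecord("M-002", "tech", "DE", 120e6, date(2022, 3, 15), 15.0, 12),
    IndemnityRecord("M-003", "tech", "NY", 80e6, date(2024, 1, 10), 20.0, 24),
    IndemnityRecord("M-004", "energy", "TX", 300e6, date(2023, 9, 30), 12.5, 18),
    IndemnityRecord("M-005", "tech", "CA", 500e6, date(2015, 6, 1), 7.5, 12),
]

caps = caps_for(records, "tech", 100e6, date(2021, 1, 1))
print(caps, median(caps))  # [10.0, 15.0] 12.5
```

The same records could be joined to dispute outcomes by `matter_id` to explore how cap decisions correlated with later claims.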

4. Make models learn your firm, not just “the law”

Many “legal-tuned” tools are still generic LLMs pointed at your DMS with prompt engineering and a slick UI. They don’t reliably mirror your firm’s risk tolerances, preferred positions, or sector-specific nuance because there’s no curated, firm-specific training corpus backing them.

Invest in golden datasets and tagging schemes. Doing so enables your firm to capture what “buyer-friendly,” “market,” or “high-risk” actually means in your house style. With that foundation, models start to behave differently — turning institutional knowledge into a defensible moat.
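One simple way to put a golden dataset to work is to check how often a tool's labels match the labels your own lawyers assigned. The sketch below is only an assumption about how such a check might look; the clause IDs and both sets of labels are invented for illustration.

```python
from collections import Counter

# Labels assigned by the firm's own reviewers (hypothetical golden set).
golden = {
    "clause-001": "buyer-friendly",
    "clause-002": "market",
    "clause-003": "high-risk",
    "clause-004": "market",
}

# Labels produced by a model or tool for the same clauses (hypothetical output).
predicted = {
    "clause-001": "buyer-friendly",
    "clause-002": "market",
    "clause-003": "market",
    "clause-004": "market",
}


def agreement_by_label(golden, predicted):
    """Share of golden clauses per label where the prediction matches the golden label."""
    total = Counter(golden.values())
    hits = Counter(
        label for clause_id, label in golden.items()
        if predicted.get(clause_id) == label
    )
    return {label: hits[label] / total[label] for label in total}


scores = agreement_by_label(golden, predicted)
print(scores)  # {'buyer-friendly': 1.0, 'market': 1.0, 'high-risk': 0.0}
```

In this toy example the tool misses the one “high-risk” clause, which is the kind of gap a firm-specific golden set is meant to surface.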

5. Treat institutional data like a product

Most firms’ work product contains decades’ worth of deals that are technically “saved” but practically unusable. In an AI-first world, that’s wasted leverage.

Stop putting your institutional data in a graveyard. Instead, run it like a product with owners, roadmaps, and clear quality bars. Name real “customers” for your data, such as deal teams, litigators, and pricing teams, then design the structure around the questions they ask every week: What positions do we usually take? How did that risk allocation play out? When you can track adoption and measure impact on realization, win rates, and client retention, your knowledge base compounds in value instead of accumulating one-off AI outputs.

From shiny tools to a durable system

These strategies shift your AI program from chasing point solutions to building a durable, firm-specific legal knowledge system. In 2026, that system will determine which firms turn AI into a real strategic edge.

Syntracts was built for exactly this shift. We turn messy contract libraries into secure, cross-matter intelligence that your models can trust and your partners can act on. With on-prem deployment and a security model designed for AmLaw 25 risk profiles, Syntracts captures clause-level structure, enforces governance, and feeds that data back into the tools your lawyers already use, all without sending sensitive content to someone else’s cloud.

Request a demo to see how these strategies look in practice at your firm.